What Is a Checkpoint in the Field of AI?

Training an AI model can take days, or even weeks, depending on the amount of data provided. This consumes a lot of resources, and it's crucial not to lose all this work in case of a problem. This is where checkpoints come into play.

What is a checkpoint?

The term "checkpoint" is familiar from video games, where you save your progress at a given moment so that you don't have to start over after a defeat.

In AI, it's basically the same concept. A checkpoint is a save point in the training of an AI model: a snapshot of the model's state written to disk at a given moment. It allows the training to resume from where it left off, without having to start over. Since training a model can take a long time, a single failure, such as a crash or a power cut, can wipe out all progress, and that's probably the last thing anyone wants.
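To make the save-and-resume idea concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the "model" is a single number trained by gradient descent, and the names `save_checkpoint`, `load_checkpoint`, and `train` are hypothetical helpers, not the API of any real framework. Real checkpoints usually also store things like the optimizer state, but the principle is the same.

```python
import json
import os


def save_checkpoint(path, step, weight):
    """Write the training state to disk (hypothetical helper)."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "weight": weight}, f)
    # Rename only after the write finished, so a crash mid-write
    # does not leave a half-written checkpoint behind.
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return (step, weight), or a fresh state if no checkpoint exists."""
    if not os.path.exists(path):
        return 0, 0.0
    with open(path) as f:
        state = json.load(f)
    return state["step"], state["weight"]


def train(path, total_steps, save_every=2, lr=0.1, target=3.0):
    """Toy training loop: gradient descent on (weight - target)^2."""
    # Resume from the last checkpoint if there is one.
    step, weight = load_checkpoint(path)
    while step < total_steps:
        grad = 2.0 * (weight - target)
        weight -= lr * grad
        step += 1
        if step % save_every == 0:
            save_checkpoint(path, step, weight)
    return step, weight
```

If the process stops partway through, calling `train` again with the same path picks up from the last saved step instead of step 0, and ends at the same result as an uninterrupted run.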

Sharing checkpoints

Checkpoints can also be shared. Sites like CivitAI list checkpoints for models like Stable Diffusion. These checkpoints are posted by the community, and some are truly interesting.

This saves time, since you can use an already trained model for your own project without having to train it yourself.

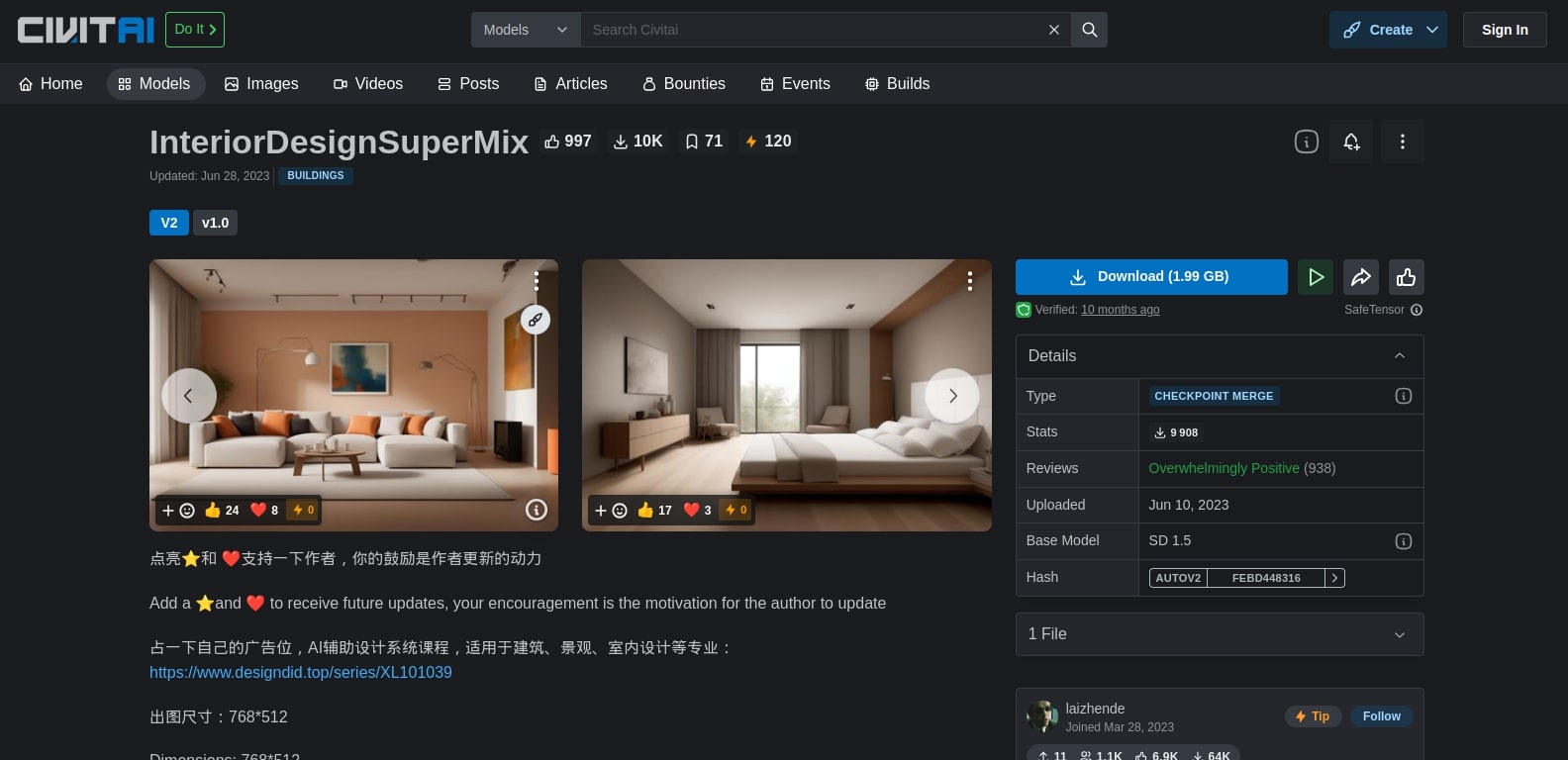

Checkpoint on the CivitAI site

For instance, this checkpoint allows for the generation of interior images of houses. The base AI model used is SD 1.5. A checkpoint is quite a heavy file; this one is about 2 GB. Generally, on CivitAI, checkpoints range from 2 GB to 8 GB for the heavier ones.
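As a rough way to see where these file sizes come from, a checkpoint is mostly a list of numbers (the model's parameters), so its size is approximately the parameter count multiplied by the bytes used per parameter. The sketch below assumes a round figure of one billion parameters purely for illustration; it is not a number taken from the CivitAI page, and `estimate_checkpoint_gb` is a hypothetical helper.

```python
def estimate_checkpoint_gb(num_parameters, bytes_per_parameter):
    """Approximate file size in GB, ignoring metadata and file overhead."""
    return num_parameters * bytes_per_parameter / 1e9


# Assumed parameter count, for illustration only.
ASSUMED_PARAMETERS = 1_000_000_000

half_precision_gb = estimate_checkpoint_gb(ASSUMED_PARAMETERS, 2)  # 16-bit floats
full_precision_gb = estimate_checkpoint_gb(ASSUMED_PARAMETERS, 4)  # 32-bit floats

print(half_precision_gb, full_precision_gb)
```

Under that assumption, the same model takes about twice as much space when stored in 32-bit precision as in 16-bit precision, which is one reason checkpoints of similar models can differ so much in size.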

Checkpoints, a gateway to democratization?

Checkpoints undoubtedly lower the barrier to entry for AI projects. Training a model is often the biggest challenge, so having an already trained model lets you focus on applying the model rather than training it. In essence, checkpoints are not just a technical tool; they embody a vision of open and shared AI, essential for shaping a future where technology benefits everyone.